CUDA 1: GPU v/s CPU

Taking it a step further from the basics and comparing CPUs and GPUs!

In my previous post, “CUDA 0: From OS to GPUs,” I provided a brief overview of key terms in parallel computing and discussed the rise of GPUs. This article dives deeper into the technical differences between GPU and CPU operations, focusing on the mechanics rather than their applications. I also plan on diving deeper into the fundamentals of parallel computing and hope to break down how GPUs work.

Understanding these differences and intricacies is crucial for truly grasping CUDA, because it will help us understand the sources of latency and potential areas for optimization — topics we will cover in future articles. We will conclude this piece with a primer on CUDA and a basic outline of what a CUDA program entails. Looking ahead, the next article will offer a step-by-step guide, from the fundamentals of CUDA programming to crafting neural networks from scratch using CUDA.

For this series, I’ll be assuming that you are familiar with basic C/C++ and Operating Systems. I’ll also assume that you are familiar with the usage of GPUs for Parallel Programming, although I’ll rehash a lot of it here.

Outline for this article:

- Differences between a CPU and a GPU

- Key Hierarchies of GPU

- Synchronization in GPU

- What is CUDA?

Picking up where we left off…

My previous article is linked here: CUDA 0: From OS to GPUs

Continuing with our game analogy, the workings of a CPU resemble Method 1: it processes instructions one after another and cannot truly move on until the current task is done. Like the first method in our game, this sequential execution leads to inefficiencies, especially when the tasks are independent of each other. These inefficiencies show up as wait times and task dependencies, where one process (person) must wait for another to finish before it can proceed.

Using multiple cores shortens these wait times and dependencies but does not eliminate them: we can parallelize our tasks, but a CPU simply does not have enough cores. Hold on to this thought, because CPUs really click once we see why complete parallelism is difficult for them and why the parallelism they do offer is not enough for our tasks.

Flynn’s Taxonomy

Without going too in-depth, let’s reiterate what you might already know from Flynn’s taxonomy — a system used to classify computer architectures based on how they handle the flow of instructions and data. We generally talk about four classifications: SISD, SIMD, MISD, and MIMD.

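In terms of parallel programming, a single CPU core running ordinary scalar code is the textbook SISD (Single Instruction, Single Data) machine. A multi-core CPU, where each core follows its own instruction stream on its own data, is MIMD (Multiple Instruction, Multiple Data), and modern CPU cores also contain SIMD (Single Instruction, Multiple Data) vector units, such as SSE and AVX on x86. For data-parallel work, SIMD is the obvious choice: a single instruction is executed on multiple data points simultaneously, which improves computational efficiency by doing many operations in parallel. GPUs are built around this idea; NVIDIA calls its variant SIMT (Single Instruction, Multiple Threads).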
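To make the SISD/SIMD difference concrete, here is a toy Python model that counts instruction issues rather than measuring real hardware. The vector width of 8 is an assumption (it matches 256-bit AVX registers holding eight 32-bit floats), and the function names `add_sisd` and `add_simd` are illustrative only.

```python
# Toy model of Flynn's SISD vs. SIMD: it counts "instruction issues",
# not real CPU cycles. width=8 is an assumed vector width.

def add_sisd(a, b):
    """SISD: one instruction operates on one pair of elements at a time."""
    out, issues = [], 0
    for x, y in zip(a, b):
        out.append(x + y)
        issues += 1          # one scalar add per element
    return out, issues

def add_simd(a, b, width=8):
    """SIMD: one instruction operates on `width` element pairs at once."""
    out, issues = [], 0
    for start in range(0, len(a), width):
        # every lane in this chunk executes the *same* add on its own data
        lanes = zip(a[start:start + width], b[start:start + width])
        out.extend(x + y for x, y in lanes)
        issues += 1          # one vector add per chunk of `width` elements
    return out, issues

a = list(range(1000))
b = list(range(1000))
print(add_sisd(a, b)[1])   # 1000 issues
print(add_simd(a, b)[1])   # 125 issues: 1000 / 8
```

Both functions produce the same sums; only the number of instructions needed to get there changes.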

Let’s look at the CPU architecture to understand how!

CPU: The Latency-Oriented Approach

When you look at a CPU, most of its chip area goes to cache systems and control units rather than to arithmetic. CPUs are built for low latency: large multi-level caches keep memory access fast, and sophisticated control logic (branch prediction, out-of-order execution) keeps a single stream of instructions moving as quickly as possible. Each core is extremely powerful on its own. However, that per-core power is largely wasted on workloads made of huge numbers of simple, independent operations that could be processed together.

Multi-core CPUs do parallelize our work, but that is simply not enough when ML models involve billions of parameters and computations. Each core in a multi-core CPU can run its own thread of execution, yet the number of cores is much lower: consumer CPUs typically have a handful to a few dozen, compared with the thousands of cores in a GPU. So even when the tasks are independent, a CPU can only work on a few of them at a time, and the rest have to wait their turn.
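A back-of-the-envelope model shows why core count matters. Assuming each worker can finish one independent operation per round, and picking illustrative core counts (16 for a CPU and 10,000 for a GPU, both hypothetical), the number of sequential rounds is a ceiling division. The sketch also shows the usual way a CPU program hands each core its own contiguous chunk of the work; `rounds_needed` and `partition` are made-up helper names.

```python
import math

def rounds_needed(num_ops, num_workers):
    """Sequential rounds if every worker finishes one independent op per round."""
    return math.ceil(num_ops / num_workers)

def partition(num_ops, num_workers):
    """Give each worker (e.g. one CPU core) a contiguous chunk of op indices."""
    chunk = math.ceil(num_ops / num_workers)
    return [range(w * chunk, min((w + 1) * chunk, num_ops))
            for w in range(num_workers)]

NUM_OPS = 1_000_000  # e.g. one multiply-add per element of a 1000 x 1000 matrix
print(rounds_needed(NUM_OPS, 16))          # 62500 rounds on a hypothetical 16-core CPU
print(rounds_needed(NUM_OPS, 10_000))      # 100 rounds on a hypothetical 10,000-core GPU
print([len(r) for r in partition(10, 4)])  # [3, 3, 3, 1]
```

The model ignores clock speed and per-core power, which is exactly the CPU's strength; it only captures how many operations can be in flight at once.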

GPU: A Throughput-Oriented Design

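Conceptually, a GPU is built from the same ingredients as a CPU, but the proportions are shifted to favor throughput:

- It packs far more arithmetic units, called Streaming Processors (SPs) or CUDA cores, into each of its Streaming Multiprocessors (SMs).

- It typically has a two-level cache hierarchy (an L1 per SM and an L2 shared by the whole chip), compared with the three-level cache hierarchy common on CPUs.

- Lastly, its control units are very small, because large groups of cores share the same instruction stream.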

Let’s look more closely.

Physical Components (Compute and Memory)

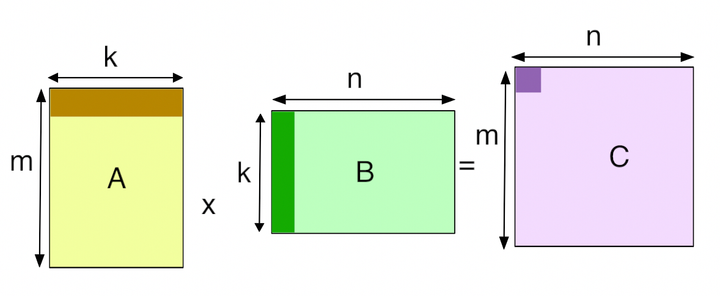

These are the components that physically exist on the GPU. At the physical level, a GPU consists of many Streaming Multiprocessors (SMs) plus an L2 cache shared by all of them. Each SM in turn contains a set of Streaming Processors (SPs), or CUDA cores, an L1 cache, shared memory (SMEM), and a register file for on-chip storage.

- Streaming Processors/CUDA Cores (SP): The basic processing units of the GPU. They carry out the actual computations.

- Streaming Multiprocessors (SM): A collection of SPs together with the schedulers and on-chip memory that feed them. An SM manages and coordinates the execution of work across the SPs it is in charge of.

- Cache/Memory Hierarchy: we will revisit this in a bit!

Logical Components — GPU’s Compute Hierarchy

Logical components are the concepts we use to design and reason about programs that run on a GPU. They make our lives as programmers a lot easier because we no longer have to worry about every hardware intricacy; logical components are, more or less, abstractions over the physical components of a GPU. Together, they form the GPU’s compute hierarchy:

- Threads: The smallest unit of execution on a GPU, often loosely compared to a CUDA core, that performs the actual computations. Every thread runs the same kernel code and uses its own built-in index to select the portion of the data it works on.

- Warps: A warp is a group of 32 consecutive threads that the SM schedules as a single unit. All threads in a warp execute the same instruction at the same time, each on its own data point. Warps can also be thought of as a physical component, since the programmer never creates them explicitly and has very little control over how the hardware schedules them.

- Thread Blocks: A collection of threads (split into one or more warps) that run on the same SM and share that SM’s shared memory. Threads within the same block can synchronize their execution to coordinate memory access.

- Grids: The higher-level collection of blocks that execute a kernel (a function that runs on the GPU). A grid can be arranged in one, two, or three dimensions to efficiently manage the execution of large datasets.
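Here is how those levels fit together in practice. In CUDA C++, each thread finds its position through built-in variables, and for a 1-D launch the usual global index is `blockIdx.x * blockDim.x + threadIdx.x`; for a 1-D block, the warp and lane follow from dividing `threadIdx.x` by the warp size, 32. The Python sketch below only reproduces that arithmetic on the CPU for every thread of a small, hypothetical launch (4 blocks of 256 threads); `thread_coordinates` and `launch_1d` are illustrative names, and nothing runs on a GPU.

```python
WARP_SIZE = 32  # warp size on current NVIDIA GPUs

def thread_coordinates(block_idx, thread_idx, block_dim):
    """Mirror CUDA's 1-D indexing for one thread of a 1-D block."""
    global_idx = block_idx * block_dim + thread_idx  # blockIdx.x * blockDim.x + threadIdx.x
    warp_in_block = thread_idx // WARP_SIZE          # which warp of the block
    lane = thread_idx % WARP_SIZE                    # position inside that warp
    return global_idx, warp_in_block, lane

def launch_1d(grid_dim, block_dim):
    """Enumerate every thread of a 1-D grid (sequentially, unlike a real GPU)."""
    return [thread_coordinates(b, t, block_dim)
            for b in range(grid_dim) for t in range(block_dim)]

threads = launch_1d(grid_dim=4, block_dim=256)
print(len(threads))   # 1024 threads in total
print(threads[300])   # (300, 1, 12): block 1, thread 44 -> warp 1, lane 12
```

With 256 threads per block, each block holds 8 warps, and every thread ends up with a distinct global index that it can use to pick its data element.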

Memory Hierarchy

Until now, we discussed components that handle the compute (instructions and computations) given to the GPU. However, data access and storage is another huge component of a processor. Let’s discuss how memory is organized in GPUs.

- Registers (private to each thread): The fastest and smallest storage. Each thread’s registers hold only that thread’s variables and cannot be accessed by other threads. Their scope is very limited, but they are very fast.

- Shared Memory/L1 Cache (per SM, used by blocks): The two are separate memories, but on many recent NVIDIA GPUs they are carved out of the same on-chip storage in each SM, so they are often grouped together. Shared memory is managed explicitly by the programmer and is visible only to threads in the same block, while L1 is a hardware-managed cache. Both are slower than registers but still extremely fast. They are small, so they should hold only the data that is being actively reused.

- L2 Cache (shared by all SMs, and therefore all thread blocks): Much larger, but slower to access. It serves as the intermediate level between the SMs’ on-chip memory and global memory.

- Global Memory (accessible by the whole device): The GPU’s main memory (DRAM) and by far the largest memory on the GPU; every thread in every block can read and write it. However, it is also the slowest to access, which is why we work hard to make good use of the faster memories above it.
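The practical payoff of this hierarchy is data reuse. A classic illustration is tiled matrix multiplication: each block stages tiles of the inputs in shared memory so that every value fetched from global memory is used many times. The Python sketch below runs sequentially on the CPU and treats a local list as “shared memory”; it only counts global-memory reads to show the access pattern, and `matmul_naive`, `matmul_tiled`, and the tile size of 4 are illustrative choices, not a GPU implementation.

```python
def matmul_naive(A, B):
    """One 'thread' per output element; every operand comes from global memory."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    global_reads = 0
    for i in range(n):
        for j in range(n):
            acc = 0
            for k in range(n):
                acc += A[i][k] * B[k][j]
                global_reads += 2  # A[i][k] and B[k][j]
            C[i][j] = acc
    return C, global_reads

def matmul_tiled(A, B, tile):
    """One 'block' per tile of C; input tiles are staged in fast local storage."""
    n = len(A)
    assert n % tile == 0, "sketch assumes n is a multiple of tile"
    C = [[0] * n for _ in range(n)]
    global_reads = 0
    for bi in range(0, n, tile):
        for bj in range(0, n, tile):
            acc = [[0] * tile for _ in range(tile)]
            for bk in range(0, n, tile):  # march along the shared dimension
                # Stage one tile of A and one tile of B ("shared memory").
                sA = [row[bk:bk + tile] for row in A[bi:bi + tile]]
                sB = [row[bj:bj + tile] for row in B[bk:bk + tile]]
                global_reads += 2 * tile * tile
                # Every element of C's tile reuses the staged tiles.
                for i in range(tile):
                    for j in range(tile):
                        for k in range(tile):
                            acc[i][j] += sA[i][k] * sB[k][j]
            for i in range(tile):
                C[bi + i][bj:bj + tile] = acc[i]
    return C, global_reads

n = 8
A = [[i + j for j in range(n)] for i in range(n)]
B = [[(i * j) % 5 for j in range(n)] for i in range(n)]
C1, naive_reads = matmul_naive(A, B)
C2, tiled_reads = matmul_tiled(A, B, tile=4)
assert C1 == C2
print(naive_reads, tiled_reads)  # 1024 256
```

The naive version reads 2n³ values from global memory, while the tiled one reads 2n³/tile, so larger tiles (limited by shared-memory capacity) mean less traffic to the slowest level.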

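Because threads in the same block cooperate through shared memory, they need a way to coordinate: a thread must not read a value before another thread has finished writing it. Getting this synchronization right, without making threads wait longer than necessary, is key to minimizing latency and maximizing performance. Let’s look at how synchronization works on a GPU.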

Synchronization and Asynchrony in GPUs

Imagine a school with 2048 assignments, 32 classes, and 32 students per class. The task is to distribute these assignments among the students, where each class receives 64 assignments, and each student is responsible for completing 2 assignments.

In this scenario, students represent threads, classes represent blocks, and assignments represent the data to be processed. Just as each class works independently and finishes at its own pace depending on its students, GPU blocks run independently and finish at different rates. Within a block, threads can synchronize at a barrier (`__syncthreads()` in CUDA): every thread in the block must reach it before any can continue, so the whole class waits for the slowest student to finish the current assignment before moving on to the next one.

Warps make this even more visible. The 32 threads (students) of a warp execute the same instruction together, so if one of them falls behind, for example because it is waiting on a slow memory access or took a different branch, the rest of the warp cannot move ahead without it.
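The school analogy can be written out as a small Python model. It assumes one particular way of handing out assignments (in each round, consecutive students take consecutive assignments) and made-up completion times; the helper names are hypothetical. The point is only that, with a barrier after every round, a class finishes at the sum of each round’s slowest time, which can be later than if students never waited for each other.

```python
NUM_ASSIGNMENTS = 2048
NUM_CLASSES = 32                                # blocks
STUDENTS_PER_CLASS = 32                         # threads per block
PER_CLASS = NUM_ASSIGNMENTS // NUM_CLASSES      # 64 assignments per class
PER_STUDENT = PER_CLASS // STUDENTS_PER_CLASS   # 2 assignments per student

def assignment_index(cls, student, round_):
    """Assignment a student works on in a given round (hypothetical mapping:
    each round, consecutive students take consecutive assignments)."""
    return cls * PER_CLASS + round_ * STUDENTS_PER_CLASS + student

def finish_time_with_barrier(times):
    """times[r][s] = time student s needs in round r. A barrier after every
    round means each round lasts as long as its slowest student."""
    return sum(max(round_times) for round_times in times)

def finish_time_without_barrier(times):
    """Without barriers, the class ends when the student with the largest
    total workload finishes."""
    return max(sum(per_student) for per_student in zip(*times))

# Every assignment is handed out exactly once.
handed_out = sorted(assignment_index(c, s, r)
                    for c in range(NUM_CLASSES)
                    for s in range(STUDENTS_PER_CLASS)
                    for r in range(PER_STUDENT))
assert handed_out == list(range(NUM_ASSIGNMENTS))

# Made-up timings: everyone needs 1 unit per assignment, except that
# student 5 is slow in round 0 and student 9 is slow in round 1.
times = [[4 if s == 5 else 1 for s in range(STUDENTS_PER_CLASS)],
         [4 if s == 9 else 1 for s in range(STUDENTS_PER_CLASS)]]
print(finish_time_with_barrier(times))     # 8: each round waits for a slow student
print(finish_time_without_barrier(times))  # 5
```

Barriers are still necessary whenever one round depends on results from the previous one; the model simply shows what they cost.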

Latency Hiding and Asynchrony

To manage the inefficiencies that arise from these stalls, GPUs use a strategy known as latency hiding. Each SM keeps many warps resident at once, and when one warp is stalled (most often waiting for data from global memory), the warp scheduler simply issues instructions from another warp that is ready. Because every resident warp keeps its own registers on the SM, this switch costs essentially nothing, unlike a context switch on a CPU. The cores stay busy, much like handing a new set of assignments to another group of students while one group is delayed.
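The effect shows up even in a toy scheduler model. The sketch below assumes a single issue slot per cycle, a fixed and deliberately small stall of 20 cycles after every instruction, and a “first ready warp” policy; real SMs have several schedulers and many kinds of stalls, so only the trend matters here. `simulate` and its parameters are hypothetical names.

```python
def simulate(num_warps, mem_latency, instrs_per_warp):
    """Toy SM: one instruction can be issued per cycle. After each issued
    instruction a warp stalls for `mem_latency` cycles (as if waiting on a
    global-memory load). Returns the fraction of cycles spent issuing work."""
    ready_at = [0] * num_warps                 # first cycle each warp can issue
    remaining = [instrs_per_warp] * num_warps  # instructions left per warp
    cycle = busy = 0
    while any(remaining):
        # Warp scheduler: issue from the first warp that is ready.
        for w in range(num_warps):
            if remaining[w] and ready_at[w] <= cycle:
                remaining[w] -= 1
                ready_at[w] = cycle + mem_latency  # stalled until data arrives
                busy += 1
                break
        cycle += 1
    return busy / cycle

for warps in (1, 5, 20):
    print(warps, round(simulate(warps, mem_latency=20, instrs_per_warp=10), 2))
# 1 0.06
# 5 0.27
# 20 1.0
```

With one warp, the issue slot is busy only about 1 cycle in 20; with 20 warps, some warp is always ready and no cycle is wasted. Real global-memory latencies are in the hundreds of cycles, which is why GPUs keep so many warps resident per SM.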

Thread Divergence

However, synchronization brings a challenge known as thread divergence. It occurs when threads within the same warp hit a branch (such as an if/else) and take different paths. Since a warp executes one instruction at a time for all of its threads, it cannot run both paths in parallel: it runs each path in turn while the threads that did not take that path sit idle, which creates a bottleneck. To avoid this, we need to pay attention to the code we write and arrange it so that threads in the same warp take the same path whenever possible.

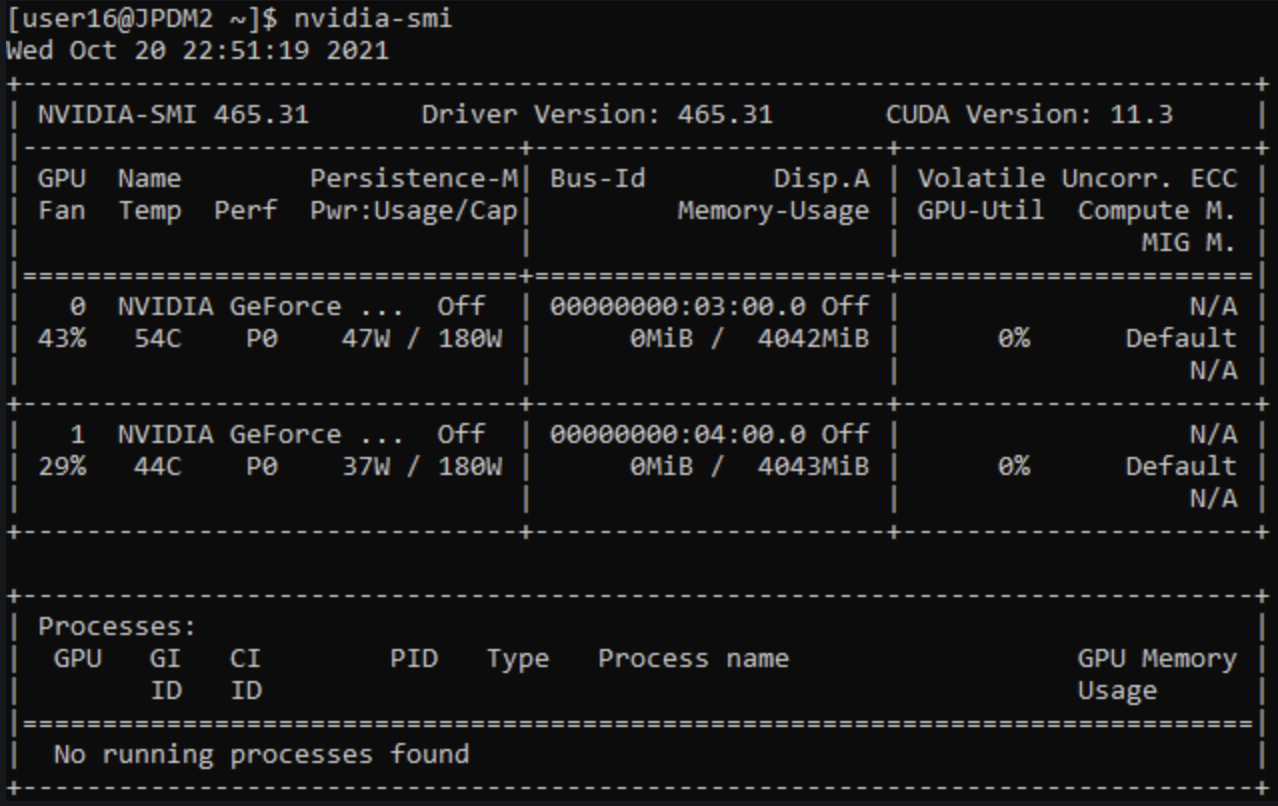
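This serialization can be captured in a small cost model. It assumes each branch body has a fixed, made-up cost of 10 units and ignores everything else (reconvergence details, memory traffic); `warp_cost` and `kernel_cost` are hypothetical helpers. Both launches below do the same amount of useful work, and only the branch condition differs.

```python
WARP_SIZE = 32

def warp_cost(branch_of_lane, cost_of_branch):
    """A warp runs each distinct path taken by any of its lanes one after
    another, masking off the other lanes, so it pays for every path taken."""
    return sum(cost_of_branch[b] for b in set(branch_of_lane))

def kernel_cost(num_threads, choose_branch, cost_of_branch):
    """Total cost over all warps of a 1-D launch with `num_threads` threads."""
    total = 0
    for start in range(0, num_threads, WARP_SIZE):
        lanes = range(start, min(start + WARP_SIZE, num_threads))
        total += warp_cost([choose_branch(tid) for tid in lanes], cost_of_branch)
    return total

costs = {"if": 10, "else": 10}  # hypothetical cost of each branch body

# Divergent: even and odd threads of the SAME warp take different branches.
divergent = kernel_cost(1024, lambda tid: "if" if tid % 2 == 0 else "else", costs)
# Warp-uniform: the branch depends only on the warp index, so no warp diverges.
uniform = kernel_cost(1024, lambda tid: "if" if (tid // WARP_SIZE) % 2 == 0 else "else", costs)
print(divergent, uniform)  # 640 320
```

Branching on the warp index (in CUDA, `threadIdx.x / warpSize`) instead of `threadIdx.x % 2` is one common way to keep a branch warp-uniform; whether it applies depends on how the data is laid out.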

CUDA: Bridging the Gap Between CPU and GPU

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA that serves as a bridge between the capabilities of CPUs and GPUs. It allows developers to use NVIDIA GPUs for general-purpose processing, an approach known as GPGPU (General-Purpose computing on Graphics Processing Units). CUDA gives programmers access to the GPU’s virtual instruction set and memory, and GPU code is written as CUDA kernels, which are functions executed on the GPU but launched from the CPU. This model extends GPUs beyond traditional graphics work, enabling them to perform complex computational tasks that were traditionally handled by CPUs.
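In CUDA C++, a kernel is declared with `__global__`, reads its position from `blockIdx.x`, `blockDim.x`, and `threadIdx.x`, and is launched from the host with the `kernel<<<blocks, threadsPerBlock>>>(...)` syntax. The Python sketch below only emulates that launch model on the CPU, running one kernel instance per (block, thread) pair in order rather than in parallel; `launch` and `vector_add` are hypothetical names, and the host-to-device memory copies a real program needs are omitted.

```python
import math

def launch(kernel, grid_dim, block_dim, *args):
    """Emulate kernel<<<grid_dim, block_dim>>>(args): one call per thread.
    A real GPU runs these instances in parallel; this loop runs them in order."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

def vector_add(block_idx, block_dim, thread_idx, a, b, out, n):
    """Kernel body: each thread handles exactly one element."""
    i = block_idx * block_dim + thread_idx
    if i < n:  # guard: the last block may contain spare threads
        out[i] = a[i] + b[i]

n = 1000
threads_per_block = 256
blocks = math.ceil(n / threads_per_block)  # 4 blocks -> 1024 threads for 1000 elements
a = list(range(n))
b = [2 * x for x in a]
out = [0] * n
launch(vector_add, blocks, threads_per_block, a, b, out, n)
print(out[:5])  # [0, 3, 6, 9, 12]
```

Rounding the block count up and guarding with `if i < n` is the usual pattern for covering an arbitrary problem size with fixed-size blocks.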

The beauty of CUDA lies in its ability to make parallel computing more accessible and efficient. It abstracts away many of the complexities of direct GPU programming, providing simpler mechanisms for running code on GPUs. With CUDA, developers can write GPU code in C and C++ (and in Python through libraries such as Numba and CuPy) and run highly parallel computations much faster than they would run on a CPU. This capability is particularly important in fields like data science, simulation, and machine learning, where reducing computation time can significantly accelerate research and development. CUDA leverages the massive parallelism of GPUs by dividing a problem into thousands of smaller tasks that can be processed simultaneously.

Conclusion

Thanks for sticking around, and I hope the basics are a bit clearer after this article! In the next piece, I hope we can start writing our first few lines of CUDA code and also build a neural network from scratch!

Please stay tuned for my next posts about CUDA!

Resources

- https://csl.cs.ucf.edu/courses/CDA6938/s08/G80_CUDA.pdf

- https://github.com/CisMine/Parallel-Computing-Cuda-C/

- https://towardsdatascience.com/cuda-for-ai-intuitively-and-exhaustively-explained-6ba6cb4406c5

- https://web.archive.org/web/20231002022529/https://luniak.io/cuda-neural-network-implementation-part-1/

- https://developer.nvidia.com/blog/even-easier-introduction-cuda/

- https://www.nvidia.com/en-us/on-demand/session/gtc24-s62191/
